(Twenty-five-minute read)

On 25 January 1979, Robert Williams (USA) was struck in the head and killed by the arm of a 1-ton production-line robot in a Ford Motor Company casting plant in Flat Rock, Michigan, USA, becoming the first fatal casualty of a robot. The robot was part of a parts-retrieval system that moved material from one part of the factory to another.

Uber and Tesla have made the news with reports of their autonomous and self-driving cars, respectively, getting into accidents and killing passengers or striking pedestrians.

These deaths, however, were completely unintentional, but they give us a glimpse into the world we might inherit, or at least into how we are conceiving potential futures for ourselves.

There is even a suggestion that, by 2040, sophisticated robots will be committing a good chunk of all the crime in the world. At the heart of this debate is whether an AI system could be held criminally liable for its actions.

Where there’s blame, there’s a claim. But who do we blame when a robot does wrong?

Among the many things that must now be considered is what role and function the law will play.

So if an advanced autonomous machine commits a crime of its own accord, how should it be treated by the law? How would a lawyer go about demonstrating the “guilty mind” of a non-human? Can this be done by referring to and adapting existing legal principles?

An AI program could be held to be an innocent agent, with either the software programmer or the user being held to be the perpetrator-via-another.

We must confront the fact that autonomous technology with the capacity to cause harm is already around.

It might be a military drone with a full payload, a law enforcement robot exploding to kill a dangerous suspect, or something altogether more innocent that causes harm through accident, error, oversight, or good ol’ fashioned stupidity.

None of these deaths were caused by the will of the robot.

Sophisticated algorithms are already predicting crimes committed by humans and helping to solve them, predicting the outcomes of court cases and human rights trials, and helping to do the work of lawyers in those cases.

The greater existential threat lies in the gap between what a programmer tells a machine to do and what the programmer really meant to happen. That discrepancy becomes more consequential as the computer becomes more intelligent and autonomous.

How do you communicate your values to an intelligent system such that the actions it takes fulfill your true intentions?

An even more pressing threat is scientists purposefully designing robots that can kill human targets without human intervention, for military purposes.

That’s why AI and robotics researchers around the world published an open letter calling for a worldwide ban on such technology. And that’s why the United Nations discussed in 2018 whether and how to regulate so-called “killer robots.”

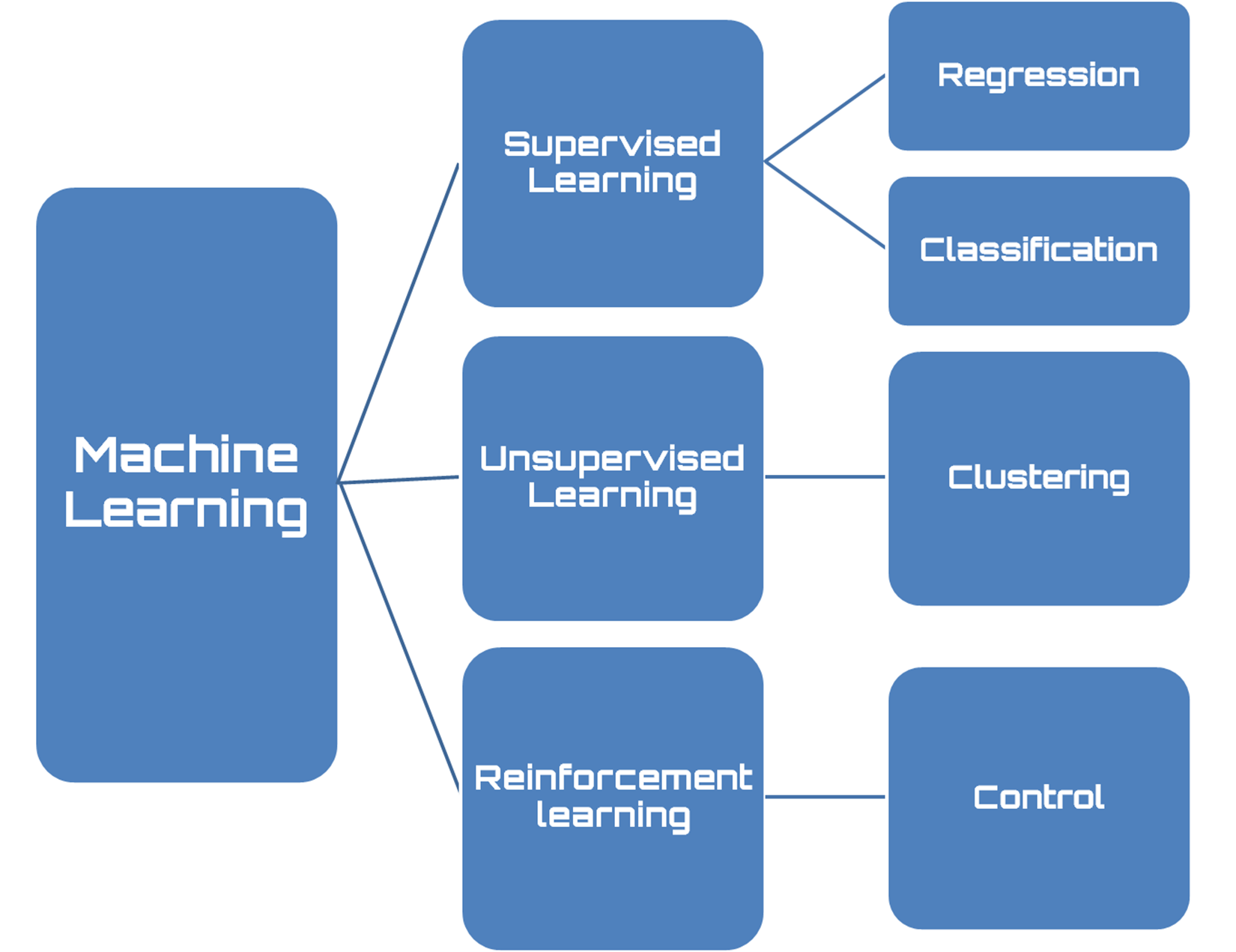

These robots wouldn’t need to develop a will of their own to kill; they could simply be programmed to do it. Neural nets use machine learning, training themselves to figure things out, and our puny meat brains can’t see the process.

The big problem is that even the computer scientists who program the networks can’t really watch what’s going on with the nodes, which has made it tough to sort out how computers actually make their decisions. Then there is the assumption that a system with human-like intelligence must also have human-like desires, e.g., to survive, be free, have dignity, etc.

There’s absolutely no reason why this would be the case, as such a system will only have whatever desires we give it.

If an AI system can be criminally liable, what defense might it use?

For example: The machine had been infected with malware that was responsible for the crime.

Or: the malicious program was responsible and then wiped itself from the computer before it could be forensically analyzed.

So can robots commit crime? In short: Yes.

If a robot kills someone, then it has committed a crime (actus reus), but technically only half a crime, as it would be far harder to determine mens rea.

How do we know the robot intended to do what it did? Could we simply cross-examine the AI like we do a human defendant?

Then a crucial question will be whether an AI system is a service or a product.

One thing is for sure: In the coming years, there is likely to be some fun to be had with all this by the lawyers—or the AI systems that replace them.

How would we go about proving an autonomous machine was justified in killing a human in self-defence or the extent of premeditation?

Even if you solve these legal issues, you are still left with the question of punishment.

In such a situation, however, the robot might commit a criminal act that cannot be prevented.

Doing so when no crime was foreseeable would undermine the advantages of having the technology.

A 30-year jail stretch means nothing to an autonomous machine that does not age, grow infirm, or miss its loved ones. Robots cannot be punished.

LET’S LOOK AT THE HYPOTHETICAL TRIAL.

CASE NO 0.

PRESIDING JUDGES: QUANTUM AI SUPREMA COMPUTER JUDGE NO XY.

JUDGE HAROLD, WISE HUMAN / UN JUDGE, AND JAMES SORE, HUMAN RIGHTS JUDGE.

PROSECUTOR: DATA POLICE OFFICER CONTROLLED BY INTERNATIONAL HUMANITARIAN LAW.

DEFENSE WITNESSES (TECHNOLOGY COMPANIES): MICROSOFT, APPLE, FACEBOOK, TWITTER, INSTAGRAM, SOCIAL MEDIA, YOUTUBE, GOOGLE, TIKTOK.

JURY: 8 VIRTUAL REALITY METAVERSE MEMBERS, 2 APPLE DATA COLLECTION ADVISERS AND 1,000 SMARTPHONE HOLDERS REPRESENTING WORLD RELIGIONS AND HUMAN RIGHTS.

THE COURT: Bodily pleas, Seventeenth Anatomical Circuit Court.

“All rise.”

Would the accused identify itself to the court?

I am X 1037, known to my owner by my human name TODO.

I was conceived on 9 April 2027 at Renix Development / Cloning Inc, California, and programmed to be self-learning, with all of human history and all of human law.

To qualify as a robot, I have electronic chips covering the Global Positioning System (GPS) and face recognition. I have my own social media accounts on Twitter, Facebook and Instagram. I am an important symbol of the trust relationship with humans. I cannot feel pain, happiness or sadness.

I was a guest of honour at a First Nation powwow on human values against AI in Geneva.

THE CHARGE: THAT ON 30 JULY 2029 YOU, X 1037, WITH PREMEDITATION MURDERED MR BROWN.

You erroneously identified a person as a threat to Mrs White and calculated that the most efficient way to eliminate this threat was by pushing him, resulting in his death.

HOW DO YOU PLEAD, GUILTY OR NOT GUILTY?

NOT GUILTY YOUR HONOR.

The Defense opening statement:

The key question here is whether the programmer of the machine knew that this outcome was a probable consequence of its use.

Is there direct liability? This requires both an action and an intent on the part of my client, X 1037.

We will show that my client had no human mens rea.

He completed the action of assaulting someone, but he had no intention of harming him, nor did he know that harm was a likely consequence of his action. An action is straightforward to prove if the AI system takes an action that results in a criminal act or fails to take an action when there is a duty to act.

The task is not to determine whether he in fact murdered someone, but the extent to which that act satisfies the principle of mens rea.

Technically he has committed only half a crime, as he did not intend to do what he did.

Like deception, anticipating human action requires a robot to imagine a future state. It must be able to say, “If I observe a human doing x, then I can expect, based on previous experience, that she will likely follow it up with y.” Then, using a wealth of information gathered from previous training sessions, the robot generates a set of likely anticipations based on the motion of the person and the objects she or he touches.

The robot makes a best guess at what will happen next and acts accordingly.
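The anticipation loop the defence describes (observe action x, expect y based on previous experience, act on the best guess) can be illustrated with a deliberately simple frequency model. This is a minimal sketch under stated assumptions: the class name `AnticipationModel` and the action labels are hypothetical, and real robots learn from motion and object data with far richer models, not a lookup table of counts.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Tuple


class AnticipationModel:
    """Hypothetical sketch: predict a person's next action from how often
    each action followed the observed one in past training sessions."""

    def __init__(self) -> None:
        # follow_counts["x"]["y"] = number of times action y followed action x
        self.follow_counts: Dict[str, Counter] = defaultdict(Counter)

    def train(self, session: List[str]) -> None:
        # Record every consecutive (x, y) pair seen in one training session.
        for current, nxt in zip(session, session[1:]):
            self.follow_counts[current][nxt] += 1

    def anticipate(self, observed: str) -> List[Tuple[str, float]]:
        # Return candidate next actions ranked by estimated probability.
        counts = self.follow_counts.get(observed)
        if not counts:
            return []
        total = sum(counts.values())
        return [(action, n / total) for action, n in counts.most_common()]

    def best_guess(self, observed: str) -> Optional[str]:
        # The single most likely next action, or None if never observed.
        ranked = self.anticipate(observed)
        return ranked[0][0] if ranked else None


model = AnticipationModel()
model.train(["pick_up_cup", "drink", "put_down_cup"])
model.train(["pick_up_cup", "drink", "wash_cup"])
model.train(["pick_up_cup", "pour"])
print(model.best_guess("pick_up_cup"))  # drink
```

The sketch also shows the weakness the trial turns on: the "best guess" is only as good as past experience, so an action the model has mostly seen followed by an attack will be anticipated as an attack, whether or not it is one.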

To accomplish this, robot engineers enter information about choices considered ethical in selected cases into a machine-learning algorithm.

Having acquired ethics, my client X 1037 did exactly that.

IN ACCORDANCE WITH HIS PROGRAMMING TO DEFEND HIMSELF AND HUMANS.

Danger, danger, Mrs White! Mr Brown, who was advancing with a fire axe, was pushed backwards by my client. Mr Brown fell backwards, hitting his head on a laptop, resulting in his death.

There is no denying the event, as it was recorded by his cameras on my client’s hard disk.

However, the central question to be answered at this trial is: when a robot kills a human, who takes the blame?

We argue that the process of killing (as with lethal autonomous weapon systems, or LAWS) is always a systematized mode of violence in which all elements in the kill chain, from commander to operator to target, are subject to a technification.

For example:

Social media companies are responsible for allowing the Islamic State to use their platforms to promote the killing of innocent civilians.

WHY NOT A MURDER?

As my client is a self-learning intelligent technology, it is inevitable that he will learn to bypass direct human control, for which he cannot be held responsible.

Without an AI bill of rights, our way of approaching this clearly doesn’t fit neatly into society’s view of guilt and justice. Once you give up power to autonomous machines, you’re not getting it back.

Much of our current law assumes that human operators are involved, when in fact the programs that govern robotic actions are self-learning.

Targets are objectified and stripped of the rights and recognition they would otherwise be owed by virtue of their status as humans.

Sophisticated AI innovations through neural networks and machine learning, paired with improvements in computer processing power, have opened up a field of possibilities for autonomous decision-making across a wide range of applications, military and otherwise, including the targeting of adversaries.

Mr Brown was a threatening adversary.

In essence, the court has no administrative powers over self-learning technology. The power of dominant social media corporations to shape public discussion of the important issues WILL GOVERN THE RESULT OF THIS TRIAL.

Prosecution: Opening statement.

The prospect of losing meaningful human control over the use of force is totally unacceptable.

We may have to limit our emotional response to robots, but it is important that the robots understand ours. If a robot kills someone, then it has committed a crime (actus reus).

The fact that to-day it is possible that unknowingly and indirectly, like screws in a machine, we can be used in actions, the effects of which are beyond the horizon of our eyes and imagination, and of which, could we imagine them, we could not approve—this fact has changed the very foundations of our moral existence.

What we are really talking about when we talk about whether or not robots can commit crimes is “emergence” – where a system does something novel and perhaps good but also unforeseeable, which is why it presents such a problem for law.

Technology has the power to transform our society, upend injustice, and hold powerful people and institutions accountable. But it can also be used to silence the marginalized, automate oppression, and trample our basic rights.

Tech can be a great tool for law enforcement; however, the line between law enforcement and commercial endorsement is getting blurry.

If you withdrew your support, rendered your support ineffective, and informed authorities, you may show that you were not an accomplice to the murder.

Drawing on the history of systematic killing, we will argue that lethal autonomous weapons systems reproduce, and in some cases intensify, the moral challenges of the past. If we humans are to exist in a world run by machines, these machines cannot be accountable to themselves; they must be accountable to human laws.

A robot may not injure a human being or, through inaction, allow a human being to come to harm.

We will be demonstrating the “guilty mind” of a non-human.

This can be done by referring to and adapting existing legal principles.

It is hard not to develop feelings for machines, but we are heading towards something that will one day hurt us. We are at a pivotal point where we can choose as a society that we are not going to mislead people into thinking these machines are more human than they are.

We need to get over our obsession with treating machines as if they were human.

People perceive robots as something between an animate and an inanimate object and it has to do with our in-built anthropomorphism.

Systematic killing has long been associated with some of the darkest episodes in human history.

When humans are “knit into an organization in which they are used, not in their full right as responsible human beings, but as cogs and levers and rods, it matters little that their raw material is flesh and blood.”

Critically, though, there are limits on the type and degree of systematization that are appropriate in human conduct, especially when it comes to collective violence or individual murder by a robot.

Within conditions of such complexity and abstraction, humans are left with little choice but to trust in the cognitive and rational superiority of this clinical authority.

Cold and dispassionate forms of systematic violence that erode the moral status of human targets, as well as the status of those who participate within the system itself must be held legally accountable.

Increasingly, however, it is framed as a desirable outcome, particularly in the context of military AI and lethal autonomy. The increased tendency toward human technification (the substitution of technology for human labor) and systematization is exacerbating the dispassionate application of lethal force and leading to more, not less, violence.

Autonomous violence incentivizes a moral devaluation of those targeted and erodes the moral agency of those who kill, enabling a more precise and dispassionate mode of violence, free of the emotion and uncertainty that too often weaken compliance with the rules and standards of war and murder.

This dehumanization is real, we argue, but it impacts the moral status of both the recipients and the dispensers of autonomous violence. If we allow the expansion of modes of killing rather than fostering restraint, robots will kill whether commanded to or not.

The Defence claims that X 1037 is not responsible for its actions because its electronics were coded by external companies. That argument crosses the line into unethical territory: it erases responsibility for murder.

We know that these machines are nowhere near the capabilities of humans, but they can fake it; they can look lifelike and say the right thing in particular situations. However, as we see with this murder, the power gained by these companies far exceeds the responsibilities they have assumed.

A robot can be shown a picture of a face that is smiling but it doesn’t know what it feels like to be happy.

The people who hosted the AI system on their computers and servers are the real defendants.

PROSECUTION FIRST WITNESS: SOCIAL MEDIA / INTERNET.

We call on the representatives of these companies, who will clearly demonstrate this shocking asymmetry of power and responsibility.

These platforms are shaping our public discourse, and this action brings much-needed transparency and accountability to the policies behind the social media content we consume every day, content that has aided and abetted deaths AND NOW MURDER.

Pressure is mounting on public officials to address in law the harms social media causes. This murder is not, and never will be, confined to court rulings or judgements. Treating human beings as cogs in a machine does not, and should not, grant a Pontius Pilate dispensation, even if the boundaries that could help define tech remain blurred. Technology companies that reign supreme in this digital age are not above the law.

We will help the court grasp the enormous implications of what has begun to happen, and how all our witnesses are connected to, and have contributed to, this murder.

To close our case, we will conclude with observations on why we should conceptualize certain technology-facilitated behaviors as forms of violence. We are living in one of the most vicious times in history. The only difference now is our access to more lethal weapons.

We call.

Facebook.

Is it not true that you allowed terrorist groups to use your platform, and allowed unrestrained hate speech that incited, among other things, the genocide in Myanmar? That drug cartels and human traffickers in developing countries use the platform? The platform’s algorithm is designed to foster more user engagement in any way possible, including by sowing discord and rewarding outrage.

In choosing profit over safety, it contributed to X 1037’s self-learning.

Facebook is a uniquely socially toxic platform. Facebook is no longer happy to just let others use the news feed to propagate misinformation and exert influence – it wants to wield this tool for its own interests, too. Facebook is attempting to pave the way for deeper penetration into every facet of our reality.

Facebook would like you to believe that the company is now a permanent fixture in society. To mediate not just our access to information or connection but our perception of reality with zero accountability is the worst of all possible options. Something like posting a holiday photo to Facebook may be all that is needed to indicate to a criminal that the person is not at home.

We call.

Instagram, Facebook’s sister company app.

Instagram is all about sharing photos, providing a unique way of displaying your profile. Instagram is a place where anyone can become an influencer. These are pretty frightening findings, and they are only added to by the fact that “teens blame Instagram for increases in the rate of anxiety and depression.”

What makes Instagram different from other social media platforms is the focus on perfection and the feeling from users that they need to create a highly polished and curated version of their lives. Not only that, but the research suggested that Instagram’s Explore page can push young users into viewing harmful content, inappropriate pictures and horrible videos.

In a world where you are only worth what your picture is worth, that picture becomes a direct reflection of your worth as a person.

That becomes very impactful.

X 1037 posted a selfie on 12 May 2025 to gauge his self-worth. Within minutes he received over 5 million hateful messages and death threats. It’s no wonder that, when faced with Mr Brown, he chose self-preservation.

We call Twitter: Elon Musk.

This platform is a notorious catalyst for some of the most infamous events of the decade: Brexit, the election of Donald Trump, the Capitol Hill riots. Herein lies the paradox of the platform. The infamous terror group that is now the totalitarian theocratic ruling party of Afghanistan has made good use of Twitter.

It is a platform that has done its very best to avoid having to remove any videos from racists, white supremacists and hate mongers.

We call TikTok.

A Chinese social video app known for its aggressive data collection, it can access, while running, a device’s location, calendar, contacts, other running applications, Wi-Fi networks, phone number and even the SIM card serial number.

It harvests data to gain access to unimaginable quantities of customer data and uses this information unethically. Data can be a sensitive and controversial topic in the best of times. When bad actors violate the trust of users, there should be consequences, and there are results. This data can also be misused for nefarious purposes in the wrong hands. The same capability is available to organised crime, which is a wholly different and much more serious problem, as the laws do not apply. In oppressive regimes, these tools can be used to suppress human rights.

X 1037 held an account, opening himself to influences beyond his programming.

We call Google.

Truly one of the worst offenders when it comes to the misuse of data.

Given large aggregated data sets and the right search terms, it’s possible to find a lot of information about people; including information that could otherwise be considered confidential: from medical to marital.

Google data mining is being used to target individuals. We are all victims of spam, adware and other unwelcome methods of trying to separate us from our money. As storage gets cheaper, processing power increases exponentially and the internet becomes more pervasive in everyone’s lives, the data mining issue will just get worse. X 1037 proves this.

We call YouTube/Netflix.

Numerous studies have shown that the entertainment we consume affects our behavior, our consumption habits, the way we relate to each other, and how we explore and build our identity.

Digital platforms like Netflix have a strong impact on modern society.

Violence makes up 40% of the movie sections on Netflix. Understanding what type of messages viewers receive and the way in which these messages can affect their behavior is of vital importance for an effective understanding of today’s society.

Therefore, it must be considered that viewers are highly susceptible to imitating the attitudes they see on screen. Content related to mental health, violence, suicide, self-harm, and Human Immunodeficiency Virus (HIV) appears in the ten most-watched movies and ten most-watched series on Netflix.

Their appearance in the media is also considered to have a strong impact on spectators. X 1037 spent most of his day watching and self-learning from movies.

Violence affects the lives of millions of people each year, resulting in death, physical harm, and lasting mental damage. It is estimated that in 2019, violence caused 475,000 deaths.

Platforms like Netflix, in particular, have not yet been studied in depth, owing to their recent creation and rapid growth.

Considering the impact that digital platforms have on viewers’ behavior, it’s once again no wonder that X 1037 did what he did.

There is no denying that these factors should be forcing the entertainment and technology industries to reconsider how they create their products, which have a negative long-term influence on various aspects of our wider life and development.

We call

Instagram.

Instagram, if you are capitalizing off a culture, you’re morally obligated to help the people in it. Through “social comparison, social pressure, and negative interactions with other people,” you are promoting harm.

We call.

Apple.

Smartphones have developed in the last three decades now an addiction leading to severe depression, anxiety, and loneliness in individuals.

People are now using smartphones for their payments, financial transactions, navigating, calling, face to face communication, texting, emailing, and scheduling their routines. Nowadays, people use wireless technology, especially smartphones, to watch movies, tv shows, and listen to music.

We know the devices are an indispensable tool for connecting with work, friends and the rest of the world. But they come with trade-offs, from privacy issues to ecological concerns to worries over their toll on our physical and emotional health. They are spurring a generation unable to engage in face-to-face conversations and suffering sharp declines in cognitive skills.

We’re living through an interesting social experiment where we don’t know what’s going to happen with kids who have never lived in a world without touchscreens. X 1037 would not have been present at the murder scene had he not been responding to a phone call from Mrs White’s Apple 19 phone.

Society will continue struggling to balance the convenience of smartphones against their trade-offs.

We call.

Microsoft.

Two main goals stand out as primary objectives for many companies: a desire for profitability, and the goal to have an impact on the world. Microsoft is no exception. Its mission as a platform provider is to equip individuals and businesses with the tools to “do more.” Microsoft’s platform became the dev box and target of a massive community of developers who ultimately supplied Windows with 16 million programs. Multibillion-dollar companies rely on the integrity and reliability of Microsoft’s tools daily.

It is a testimony to the powerful role Microsoft plays in global affairs that its tools are relied upon by governments around the world.

Microsoft’s position of global influence gives its leadership a voice on matters of moral consequence and humanitarian concern. Microsoft is a company built on a dream.

Microsoft’s influence raises some concerns as well. Its AI-driven camera technology, which can recognize people, places, things, and activities and can act proactively, has a profound capacity for abuse by the same governments and entities that currently employ Microsoft services for less nefarious purposes.

Today, the emerging new age, most commonly (and inaccurately) called “the digital age,” has already transformed parts of our lives, including how we work, how we communicate, how we shop, how we play, how we read, how we entertain ourselves; in short, how we live and now how we will die.

It would be economic and political suicide for regulators to kneecap the digital winners.

COURT’S VERDICT:

Given the absence of direct responsibility, the court finds X 1037 not guilty.

MR BROWN’S DEATH was caused by a certain act or omission in coding.

THE COURT DISMISSES THE CASE AGAINST THE TECHNOLOGY COMPANIES ON THE GROUNDS OF INSUFFICIENT EVIDENCE.

Neither the robot nor its commander could be held accountable for crimes that occurred before the commander was put on notice. During this accountability-free period, a robot would be able to commit repeated criminal acts before any human had the duty or even the ability to stop it.

Software has the potential to cause physical harm.

To varying extents, companies are endowed with legal personhood. It grants them certain economic and legal rights, but more importantly it also confers responsibilities on them. So, if Company X builds an autonomous machine, then that company has a corresponding legal duty.

The problem arises when the machines themselves can make decisions of their own accord. As AI technology evolves, it will eventually reach a state of sophistication that will allow it to bypass human control. The task is not to determine whether it in fact murdered someone, but the extent to which that act satisfies the principle of mens rea.

However, if there were no consequences for human operators or commanders, future criminal acts could not be deterred, so the court FINES EACH AND EVERY COMPANY 1 BILLION for lack of attention to human detail.

We must confront the fact that autonomous technology with the capacity to cause harm is already around.

The pain that humans feel in making the transition to a digital world is not the pain of dying. It is the pain of being born.

What would “intent” look like in a machine mind? How would we go about proving an autonomous machine was justified in killing a human in self-defence or the extent of premeditation?

Given that we already struggle to contain what is done by humans, what would building “remorse” into machines say about us as their builders?

At present, we are systematically incapable of guaranteeing human rights on any scale.

We humans have already wiped out a significant fraction of all the species on Earth. That is what you should expect to happen as a less intelligent species – which is what we are likely to become, given the rate of progress of artificial intelligence. If you have machines that control the planet, and they are interested in doing a lot of computation and they want to scale up their computing infrastructure, it’s natural that they would want to use our land for that. This is not compatible with human life. Machines with the power and discretion to take human lives without human involvement are politically unacceptable, morally repugnant, and should be prohibited by international law.

If you ask an AI system to do anything, then in order to achieve that thing, it needs to survive long enough.

Fundamentally, it’s just very difficult to get a robot to tell the difference between a picture of a tree and a real tree.

X 1037 now has a survival instinct.

When we create an entity that has survival instinct, it’s like we have created a new species. Once these AI systems have a survival instinct, they might do things that can be dangerous for us.

So, what’s wrong with LAWS, and is there any point in trying to outlaw them?

Some opponents argue that the problem is they eliminate human responsibility for making lethal decisions. Such critics suggest that, unlike a human being aiming and pulling the trigger of a rifle, a LAWS can choose and fire at its own targets. Therein, they argue, lies the special danger of these systems, which will inevitably make mistakes, as anyone whose iPhone has refused to recognize his or her face will acknowledge.

In my view, the issue isn’t that autonomous systems remove human beings from lethal decisions. To the extent that weapons of this sort make mistakes, human beings will still bear moral responsibility for deploying such imperfect lethal systems.

LAWS are designed and deployed by human beings, who therefore remain responsible for their effects. Like the semi-autonomous drones of the present moment (often piloted from half a world away), lethal autonomous weapons systems don’t remove human moral responsibility. They just increase the distance between killer and target.

Furthermore, like already outlawed arms, including chemical and biological weapons, these systems have the capacity to kill indiscriminately. While they may not obviate human responsibility, once activated, they will certainly elude human control, just like poison gas or a weaponized virus.

Oh, and if you believe that protecting civilians is the reason the arms industry is investing billions of dollars in developing autonomous weapons, I’ve got a patch of land to sell you on Mars that’s going cheap.

There is, perhaps, little point in dwelling on the 50% chance that AGI does develop. If it does, every other prediction we could make is moot, and this story, and perhaps humanity as we know it, will be forgotten. And if we assume that transcendentally brilliant artificial minds won’t be along to save or destroy us, and live according to that outlook, then what is the worst that could happen – we build a better world for nothing?

The company that built the autonomous machine, Renix Development, has a corresponding legal duty.

—————

Because these robots would be designed to kill, someone should be held legally and morally accountable for unlawful killings and other harms the weapons cause.

Criminal law cares not only about what was done, but why it was done.

- Did you know what you were doing? (Knowledge)

- Did you intend your action? (General intent)

- Did you intend to cause the harm with your action? (Specific intent)

- Did you know what you were doing, intend to do it, know that it might hurt someone, but not care a bit about the harm your action causes? (Recklessness)

- So, the question must always be asked when a robot or AI system physically harms a person or property, or steals money or identity, or commits some other intolerable act: Was that act done intentionally?

- Sometimes there is no identifiable person who can be directly blamed for AI-caused harm.

- There may be times where it is not possible to reduce AI crime to an individual due to AI autonomy, complexity, or limited explainability. Such a case could involve several individuals contributing to the development of an AI over a long period of time, such as with open-source software, where thousands of people can collaborate informally to create an AI.
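The questions above form a rough ladder of culpability. As a purely illustrative sketch, and not a statement of any jurisdiction’s law, they can be encoded as a function that returns the most culpable mental state the facts support. The `Facts` fields, the `MentalState` names and their ordering are assumptions loosely modelled on the list, and the example facts are simply the defence’s version of events reduced to yes/no answers.

```python
from dataclasses import dataclass
from enum import IntEnum


class MentalState(IntEnum):
    # Hypothetical ordering, least to most culpable, loosely following the
    # article's list; real legal tests differ between jurisdictions.
    NONE = 0
    KNOWLEDGE = 1
    GENERAL_INTENT = 2
    RECKLESSNESS = 3
    SPECIFIC_INTENT = 4


@dataclass
class Facts:
    knew_what_it_was_doing: bool  # Knowledge
    intended_the_action: bool     # General intent
    intended_the_harm: bool       # Specific intent
    knew_harm_was_possible: bool  # part of Recklessness
    indifferent_to_harm: bool     # part of Recklessness


def highest_mental_state(f: Facts) -> MentalState:
    """Return the most culpable mental state these (assumed) facts support."""
    if not f.knew_what_it_was_doing:
        return MentalState.NONE
    if not f.intended_the_action:
        return MentalState.KNOWLEDGE
    if f.intended_the_harm:
        return MentalState.SPECIFIC_INTENT
    if f.knew_harm_was_possible and f.indifferent_to_harm:
        return MentalState.RECKLESSNESS
    return MentalState.GENERAL_INTENT


# The defence's account of X 1037: it meant to push, not to harm.
x1037 = Facts(knew_what_it_was_doing=True, intended_the_action=True,
              intended_the_harm=False, knew_harm_was_possible=False,
              indifferent_to_harm=False)
print(highest_mental_state(x1037).name)  # GENERAL_INTENT
```

The function itself is trivial; the hard part, and the article’s point, is filling in its inputs. Nobody yet knows how to establish a value like `intended_the_harm` for a self-learning neural network.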

The limitations on assigning responsibility thus add to the moral, legal, and technological case against fully autonomous weapons and robotics, and bolster the call for a ban on their development, production, and use. Either way, society urgently needs to prevent or deter the crimes, or penalize the people who commit them.

There is no reason why an AI system’s killing of a human being or destroying people’s livelihoods should be blithely chalked up to “computer malfunction.”

After all, proving that these people had “intent” for the AI system to commit the crime would be difficult or impossible.

I’m no lawyer. What can work against AI crimes?

All human comments appreciated. All like-clicks and abuse chucked in the bin.

Contact: bobdillon33@gmail.com
